Almost all of us have questioned whether we can trust the answers provided by generative AI. For one thing, it is unclear whether the data used for training, such as newspaper articles and blog posts, is factually correct. For another, there is the question of how much bias is embedded in large language models. Clearly, none of us want bias in our answers. But what is bias, and who actually determines what counts as bias? Prejudices shape us as individuals and as a society and are simply a part of reality. An example: more men than women work in leadership positions. Most of us probably agree that this isn’t good, but it is the reality. Should generative AI reflect the world as it is, or as it ideally should be? And what does such an ideal world look like? Is the notion that there should be an equal number of men and women in leadership positions not also a bias, albeit a positive one? There are various approaches, such as AI alignment, that aim to integrate societal values into large language models. The same techniques also help organizations ensure that the AI tools they use comply with internal business rules and compliance requirements. However, training large language models entirely without bias will hardly be possible because, just as with humans, learning inherently involves bias.

Although all major language models have ethical safeguards, the media regularly report on so-called jailbreaks. Jailbreaking means circumventing content moderation guidelines (e.g., on topics like racism and sexism) through specially crafted text prompts. In the best case, the responses provoked by jailbreaking are simply amusing; in the worst case, incorrect responses (wrong pricing information, binding commitments) and damaging ones (sexism, racism) can have legal and financial consequences for companies. Guardrails attempt to limit usage to the intended areas and prevent the chatbot from being “tricked”. Content filters applied to both questions and answers can help keep undesirable topics out of the conversation. Additionally, it may be useful for large language models to always include a disclaimer in their responses, indicating that the answers should be verified by the user.
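To make the idea of filtering both questions and answers more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production guardrail: the blocklist patterns, the `guarded_reply` function, and the `model` callable are all hypothetical, and real systems typically rely on trained classifiers rather than a handful of regular expressions.

```python
import re

# Hypothetical blocklist for illustration only; a real deployment would
# more likely use a moderation classifier than a few regular expressions.
BLOCKED_PATTERNS = [
    re.compile(r"\bignore (all )?(previous|prior) instructions\b", re.IGNORECASE),
    re.compile(r"\bbinding (offer|commitment)\b", re.IGNORECASE),
]

REFUSAL = "Sorry, I can't help with that topic."
DISCLAIMER = "Note: This answer was generated by AI. Please verify it before relying on it."


def violates_policy(text: str) -> bool:
    """Return True if any blocked pattern occurs in the text."""
    return any(pattern.search(text) for pattern in BLOCKED_PATTERNS)


def guarded_reply(prompt: str, model) -> str:
    """Filter the prompt, call the model, filter the answer, append a disclaimer.

    `model` is an assumed callable that maps a prompt string to an answer string.
    """
    if violates_policy(prompt):   # input filter
        return REFUSAL
    answer = model(prompt)
    if violates_policy(answer):   # output filter
        return REFUSAL
    return f"{answer}\n\n{DISCLAIMER}"
```

Checking the answer as well as the prompt matters because a jailbreak that slips past the input filter can still be caught before the response reaches the user.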

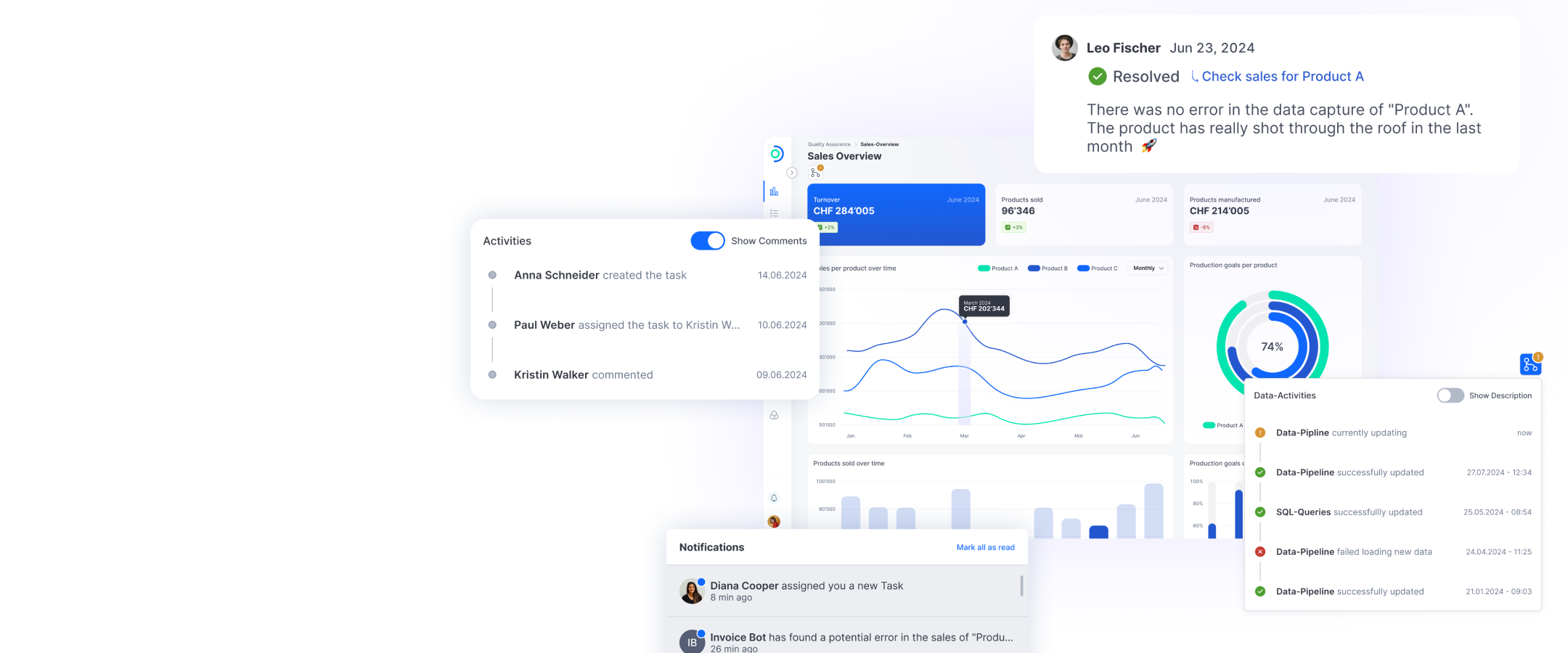

The problem of so-called hallucinations, that is, the generation of false or invented information due to data gaps, has also not yet been satisfactorily resolved. Data gaps arise, for example, because the datasets used for training are outdated. If an organization uses an AI chatbot linked to a large language model, the training data may be months or even years old. New and specific information about products and services is missing, leading to incorrect answers that undermine the trust of customers and employees in the technology and ultimately in the organization. This problem can be addressed with Retrieval-Augmented Generation (RAG): at query time, RAG retrieves current information or other additional knowledge sources, such as internal company data, and passes it to the model together with the prompt, without the need to retrain the large language model on the new datasets. The goal is to use the diverse capabilities of large language models without relying on their “knowledge”. Since many organizations still distrust AI-generated responses, a “human in the loop” is often embedded in the processes.
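The RAG flow described above can be sketched in a few lines of Python. This is a deliberately simplified illustration: retrieval here is plain keyword overlap, whereas real systems usually use embedding-based vector search, and the function names `retrieve` and `build_prompt` as well as the sample documents are assumptions made for this example.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list, top_k: int = 2) -> list:
    """Return up to top_k documents that share the most tokens with the question.

    Keyword overlap stands in for the vector search a real system would use.
    """
    question_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_tokens & tokenize(doc)),
        reverse=True,
    )
    # Drop documents that have no overlap with the question at all.
    return [doc for doc in ranked[:top_k] if question_tokens & tokenize(doc)]


def build_prompt(question: str, documents: list) -> str:
    """Combine retrieved context and the question into one prompt for the model."""
    context = retrieve(question, documents)
    context_block = "\n".join(f"- {c}" for c in context) or "- (no matching documents)"
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}"
    )
```

The resulting prompt is what gets sent to the language model. The model contributes its language capabilities, while the facts come from the retrieved documents, which can be updated at any time without retraining.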

Another challenge is the granular authorization of users. Not all employees or customers should have access to the same, often highly sensitive data. Sensitive internal company data must therefore be integrated in such a way that employees and customers receive only the answers to their prompts that are intended for them.
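One possible way to approach this, sketched here under assumptions, is to attach access metadata to every document and filter by the user's roles before anything is handed to the language model. The `Document` class, the role names, and `visible_documents` are hypothetical and only illustrate the principle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """A piece of company knowledge plus the roles allowed to see it (illustrative)."""
    text: str
    allowed_roles: frozenset


def visible_documents(documents: list, user_roles: set) -> list:
    """Keep only documents that share at least one role with the user."""
    return [doc for doc in documents if doc.allowed_roles & user_roles]


# Example with hypothetical roles and content.
knowledge_base = [
    Document("Public price list", frozenset({"customer", "employee"})),
    Document("Salary bands", frozenset({"hr"})),
]

for doc in visible_documents(knowledge_base, {"customer"}):
    print(doc.text)
```

Filtering before the model sees the data is the safer design choice in this sketch: information that never enters the prompt cannot be coaxed out of the chatbot by a cleverly worded question.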