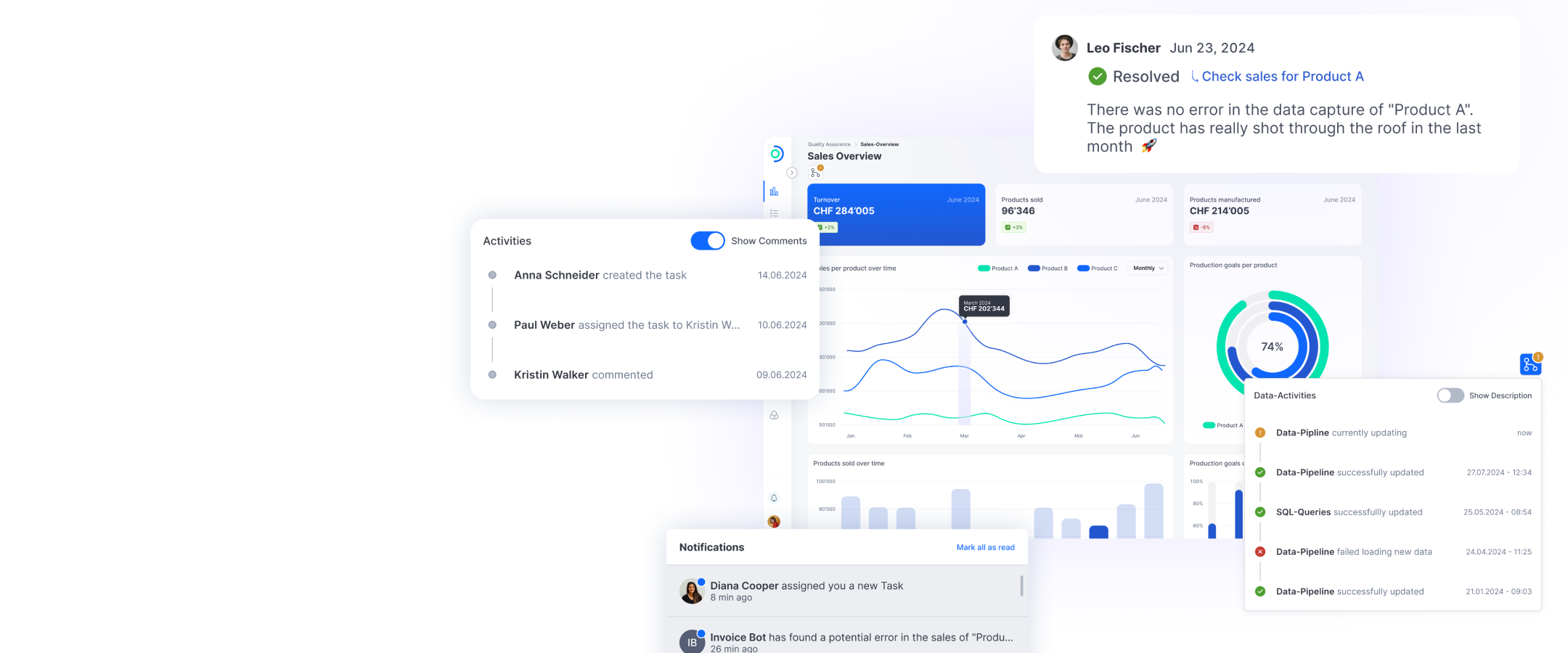

This image was generated by the DALL-E 2 AI software with the following prompt: “Create an image that effectively communicates the risks that artificial intelligence poses to companies’ cyber security. The composition should show a virtual landscape illustrating a company’s digital infrastructure, with various data streams flowing through it. Integrate visual elements such as data leaks and threatening hackers or intruders that try to infiltrate into the system. The image should have a futuristic and dynamic style with a mix of bright and dark colors to convey a sense of urgency and vulnerability.”

Over the past two years, the general public has been able to see and use advances in AI firsthand. Tools like DALL-E and Midjourney let people create images or artworks that can look authentic, including convincing fakes. And ChatGPT offers easy access to text-based AI. These models have become very popular partly because they are free to use, at least up to a certain level of usage, beyond which most of them charge fees. For example, it took ChatGPT just five days to reach one million users. And everyone wants a piece of the pie. Google, for example, is increasingly positioning itself as an AI company rather than a cloud company. This shift among big-name vendors is intensifying competition. As a result, AI is spreading and improving rapidly and will probably soon find its way into everyday commercial products.

It’s clear that easier access to AI is transforming our everyday lives and work. What’s less clear is the path this transformation will take. With many other technologies, such as blockchain, the benefits remained unclear even years later or were limited to very specific applications. The past two years, however, have made it obvious that AI is relevant in many different areas, and new use cases are emerging all the time. Many companies are already launching AI-supported products with promising features. These products draft texts and presentations from existing content or instructions, sort emails, and in some cases even answer them.

Cyber security: AI as an ally ...

Information security is another area where AI is often used. It helps detect threats and weak points more quickly and enables faster, more efficient automated monitoring and responses. As a result, level 1 analysts in a security operations center (SOC), whose work is often stressful and repetitive anyway, can take on other tasks while an AI model analyzes the data. Handing certain tasks over to AI models entirely may sound attractive. But it’s always important to know exactly how the models work and what happens to the data you enter.

... and a new source of threats

One of the biggest concerns about the use of AI is the lack of human oversight. Even though models can now carry out complex tasks, they are not perfect and can reach incorrect conclusions. That is why human oversight and decision-making must be built into the process, so that people can check whether the results these models generate are correct. In fact, AI models produce incorrect information relatively often: rather than admitting what they don’t know, they tend to cover up their “ignorance” with plausible-sounding but wrong answers.

Preventing data misuse is a major information security challenge for AI-based tools and other platforms that process data. AI models can be manipulated into revealing information that is not meant for the public, or they might use information for inappropriate purposes. There is also a risk that AI could mislead users into revealing information, such as internal data that is not meant to leave the company. There have already been cases in which employees entered confidential company information into AI models, and that data then became publicly available.

Dangerous momentum

The widespread use of AI tools has only just begun. AI’s high degree of complexity makes it difficult to predict how great a risk it poses to cyber security. Users without the relevant background knowledge struggle to understand how AI works, but even experts can’t always explain why an AI model makes a particular statement or decision. There is a risk that AI will develop a momentum of its own that we won’t be able to manage appropriately.

So what are the biggest concerns about AI?

We asked ChatGPT. Its answer was: job losses, ethics and responsibility, privacy and data protection, bias and discrimination, autonomous weapon systems, and superintelligence. It’s reassuring to see that AI can address critical and controversial topics. And there are plenty of well-intentioned initiatives, too. For example, AI providers are working on filters and rules that determine which questions their models will answer and which they won’t.

But we should also assume that an unfiltered AI model, one without ethically or legally defined rules, will find its way into the wrong hands. Whether these are state-linked organizations or criminals, sooner or later someone will successfully train an AI model for their specific needs and misuse it. AI can already be used for cyber attacks. Recently, for example, spear-phishing campaigns, which traditionally target individuals with tailored messages, have suddenly been aimed at many people within an organization at once, a shift that AI-generated, personalized messages make far easier. In the future, AI models could be used both to find security vulnerabilities and to exploit them.

Just speculation

No one, not even ChatGPT, can say whether, when, and how these risks will materialize. Like other tools, AI can improve our everyday professional and personal lives. But in the wrong hands, it can quickly become a new threat. It will be interesting to see what restrictions vendors place on their AI models and how effective these restrictions prove to be. Some AI researchers are already taking a critical stance, calling for a pause in development or for government regulation. Time will tell whether such measures are necessary and whether they would actually be effective enough.